One of the issues that I often bemoan in relation to creating Self-Mapped Learning Pathways lessons is that there really isn’t simple technology that lets you quickly build non-linear, interactive, open-ended content. I have been keeping my eye on H5P, and building a few things with Twine and SAP Chatbots, so I decided to take them all for a spin by trying to build something that allows learners to build their own learning pathway.

So how did it turn out? In general, there were some interesting affordances of the tools, but they still don’t get me to where I would like to be with the lesson design. And none of them really did much for the open-ended part. But I did create some OERs that you can use if you like (details at the end). First, some of the process.

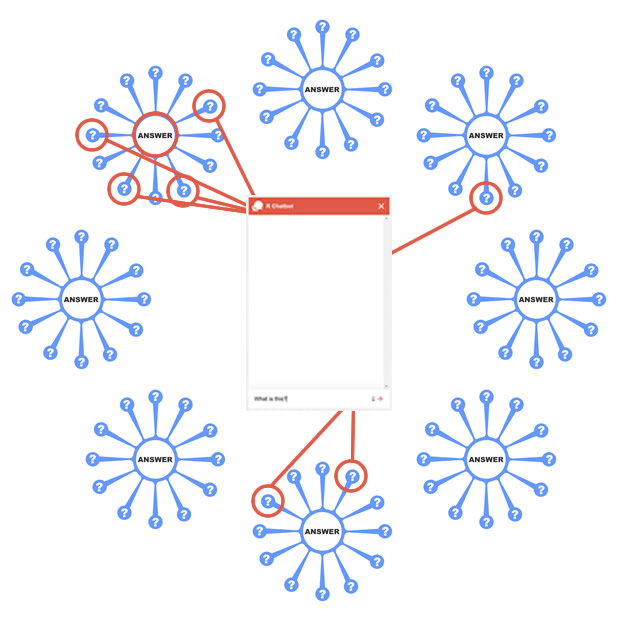

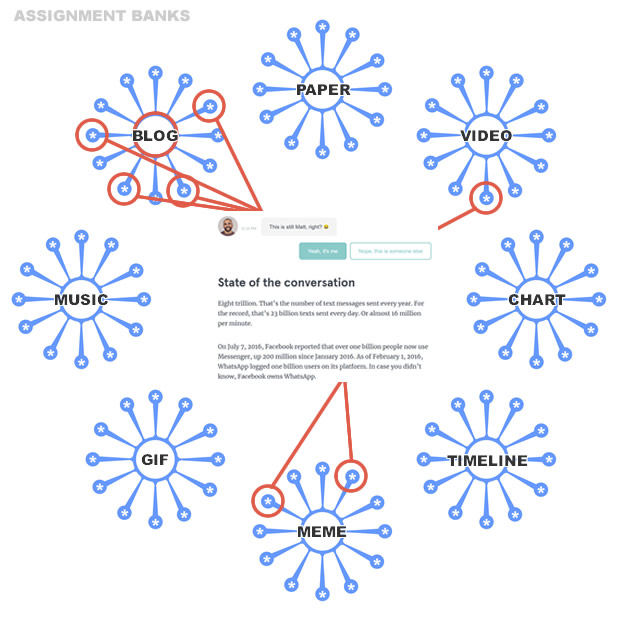

SAP Chatbots have some pretty robust tools for creating interactive chats. In theory, I think I could have built everything within a bot, but didn’t get around to it this time because it would have taken some deep dives. I’m also not convinced that a chatbot interface is the way to go, but more about that later. I decided to use a chatbot at a specific point in the learning pathways lesson to help learners think through modality options.

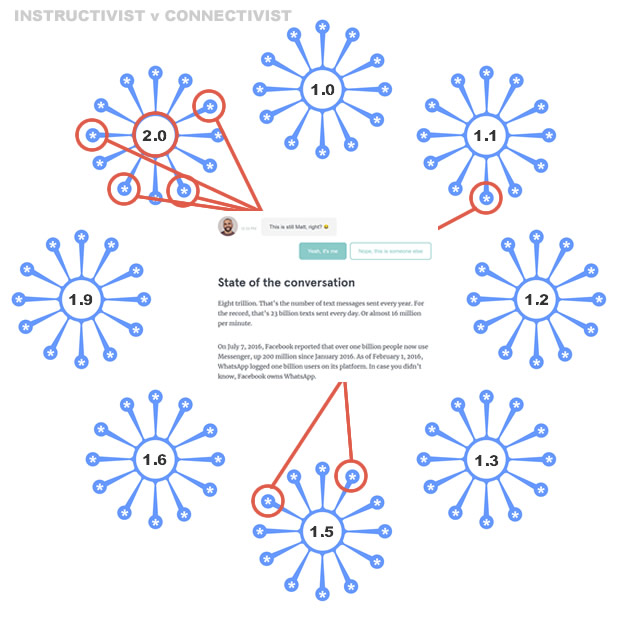

With H5P, I mainly used the Branching Scenario and Course Presentation tools. H5P gives you an intuitive interface that looks nice (and, we are told, is completely accessible), but very few options for customizing anything. I couldn’t change the look, program variables, or embed things like the SAP Chatbot anywhere in the lesson, so I came up with a way to get around that. It seems to be a good basic option for those who don’t want to get into the weeds of programming variables, but it is still mainly a way to create a Choose Your Own Adventure book. That is what some call “personalized” these days, even though it’s really not.

With Twine, there were many options to customize, add variables, manipulate code, and embed what you want. I am not sure how accessible everything in Twine is, but it does give you a lot more flexibility for customization. Also, the option to set variables means you can let learners choose some options that would reformat what they see based on their selections. I did a little bit of that, but I need to dig into this some more. Since I could embed more things in Twine, I was able to build the entire lesson from beginning to end in Twine (with a chatbot embedded near the beginning, and an H5P assessment embedded at the end of one modality).

So I ended up with two different versions of the same lesson that will allow you to compare the two options. Before I share those, a few thoughts on building the lesson.

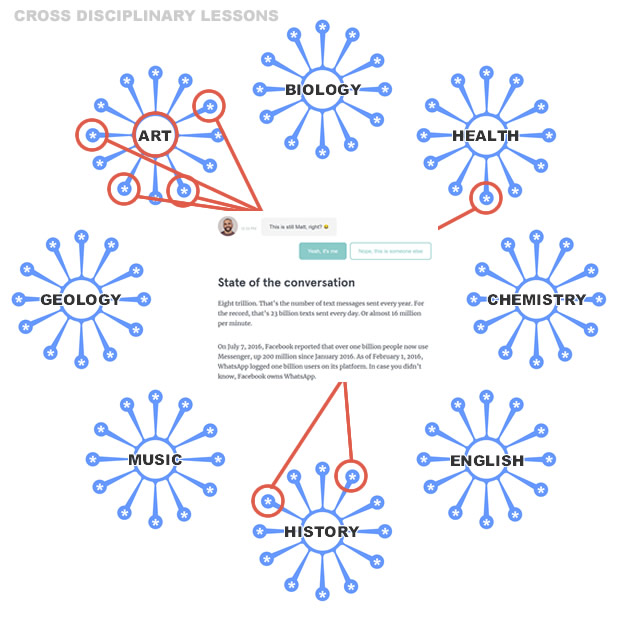

It took a long time to think through the options and build even the simple choices in these lessons. A lot of this could be attributed to the fact that I was building an entire lesson from scratch. I decided to dig into Goals, Objectives, and Competencies because so many of my students struggle with these concepts. Someone who already has a complete lesson built would probably save a lot of time on that front.

Also, I will say that I ran out of time to re-record the videos. There are some mistakes and poorly chosen words here and there (like me saying “behaviorist” when I mean “behavioral”). Maybe I will fix that in the future.

Ultimately, it took a lot of time to build the options and think through how to navigate them, while also trying to find ways to get people who choose to take their own path to the tools they need. This is the open-ended part I still struggle with. It really comes down to this: learners will step out on their own into the garden, or not. I can’t do much to pre-program those options into a system. I could be there in person to discuss their pathways if they needed it, but that is hard to pre-design for. You would spend hours creating each option, and then maybe have one or two people choose it.

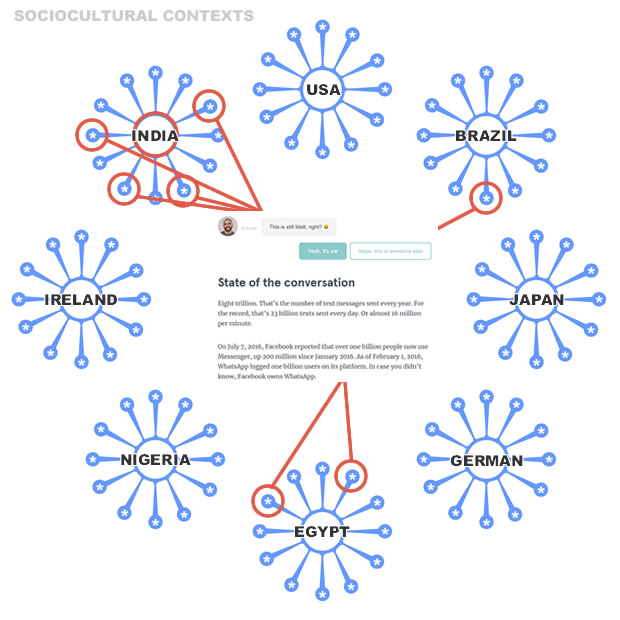

I should point out that this lesson uses a modification of the course metaphor idea that asks learners to choose between the “sidewalk” or the “garden” (or to mix both if they like). The metaphor is based on the botanical garden concept, where sidewalks guide visitors along pre-determined paths to show the highlights of the garden, while the gardens themselves can be explored as you like by leaving the sidewalk. The sidewalk represents the instructor-centered pathway, while the garden represents the student-centered, heutagogical option.

What I don’t like is how modular all of these parts feel. I wish there were a way to combine all of the elements so that learners only see one page that reloads new content based on their input. In other words, instead of a chatbot that tries to mimic human conversation (which some like, but others don’t), why not have a conversational interface that asks questions and then supplies new content, videos, activities, etc., based on the learner input?

Plus, chatbots tend to be cloud-based, meaning everything you put in them is stored on someone else’s computer. Why can’t that be a local tool that protects your privacy better?

Anyway, these lessons offer some basic ideas of what a self-mapped learning pathways micro-lesson could look like. I still feel there is more that could be done with the garden pathway using the coding/variables option in Twine. I also used some tools like Hypothes.is and Wakelet in the garden modality (just because I like them), but I need to ponder more about how those tools could be used as mapping spaces themselves.

So here is what I have:

Goals, Objectives, and Competencies Self-Mapped Learning Pathways micro-lesson in Twine

or

Goals, Objectives, and Competencies Self-Mapped Learning Pathways micro-lesson in H5P

The H5P version does use plain HTML pages for the first three pages; you will see when the switch happens. Also, the Twine version still uses some H5P activities for the Sidewalk modality assessments at the end of that modality. Since this is a stand-alone lesson, I needed some kind of assessment option and decided to reuse what I had already created.

A few design notes: The Sidewalk modality is designed so that there is always a main option to choose from for those who need the most guidance, but also links to other options for those who want to skip around. My goal is to always encourage non-linear thinking and learner choice in small or large ways whenever possible. In the Twine version of the lesson, if you choose the Sidewalk option, that is what you see. If you go to the Sidewalk + Garden option, then there is code that inserts links back to the Garden section into the Sidewalk. This is some of the customization I would like to explore more in the future. Also, the Garden and Sidewalk + Garden options have some examples and ideas for learners to choose from (basically, custom links to Twitter, Wikipedia, etc., that show specific evolving searches there). This obviously isn’t much, but it is a self-determined option, and therefore I didn’t want to offer too much. But maybe it’s not enough?
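For readers who haven't worked with story variables, here is a minimal Python sketch of the idea behind that Sidewalk + Garden behavior. It is not Twine's actual macro syntax (Twine story formats like Harlowe and SugarCube have their own), and the `modality` value and passage link labels are hypothetical placeholders, not the names used in the real lesson. The point is that one variable, set when the learner picks a modality, decides which links a Sidewalk passage shows.

```python
from dataclasses import dataclass


@dataclass
class LessonState:
    # Hypothetical stand-in for a Twine story variable, set once when the
    # learner picks "sidewalk", "garden", or "sidewalk+garden".
    modality: str = "sidewalk"


def sidewalk_links(state: LessonState) -> list[str]:
    """Return the links shown on a Sidewalk passage for this learner."""
    # Main option for learners who want the most guidance.
    links = ["Next: Objectives"]
    # Extra links so learners can still skip around non-linearly.
    links += ["Skip to Competencies", "Back to Goals"]
    # Only mixed-path learners get a link back into the Garden section.
    if state.modality == "sidewalk+garden":
        links.append("Return to the Garden")
    return links


print(sidewalk_links(LessonState("sidewalk")))
print(sidewalk_links(LessonState("sidewalk+garden")))
```

The same pattern could extend to the Garden side, for example by choosing which example search links to show, but that is an assumption about where the design might go rather than something the current lesson does.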

But this is a full micro-lesson, and I am designating it as an OER with a CC Attribution-NonCommercial-ShareAlike 4.0 International license for those who want to use it:

- The videos are on YouTube if you just want to use those.

- I have created a zip file with all of the HTML files that you can download and edit. The Twine file in that zip archive (“goals-objectives-competencies.html”) can be loaded into Twine itself and edited as you need.

- You can also download and update the two H5P files by going either to the full lesson or the assessment portion and clicking on the “Reuse” link in the bottom left corner.

- The chatbot itself can even be forked and customized by creating an account with SAP and using the fork function on the main page for the bot.

I may even create a badge for those that complete the lesson – who knows? If you want to send a few people through the lesson, feel free to do so with the links above. If you want to send a lot of people through it, maybe consider hosting it on your server. :)

Matt is currently an Instructional Designer II at Orbis Education and a Part-Time Instructor at the University of Texas Rio Grande Valley. Previously he worked as a Learning Innovation Researcher with the UT Arlington LINK Research Lab. His work focuses on learning theory, heutagogy, and learner agency. Matt holds a Ph.D. in Learning Technologies from the University of North Texas, a Master of Education in Educational Technology from UT Brownsville, and a Bachelor of Science in Education from Baylor University. His research interests include instructional design, learning pathways, sociocultural theory, heutagogy, virtual reality, and open networked learning. He has a background in instructional design and teaching at both the secondary and university levels and has been an active blogger and conference presenter. He also enjoys networking and collaborative efforts involving faculty, students, administration, and anyone involved in the education process.