Hopefully by now you have heard about the Dartmouth Medical School Cheating Scandal, where Dartmouth College officials used questionable methods to “detect” cheating in remote exams. At the heart of the matter is how College officials used click-stream data to “catch” so-called “cheaters.” Invasive surveillance was used to track students’ activity during the exams, officials used the data without really understanding it to make accusations, and then students were pressured to react quickly to the accusations without much access to the “proof.” Almost half of those accused (7 of 17, or 41%) have already had their cases dismissed (aka – they were falsely accused. Why is this not a criminal act?). Of the remaining 10, 9 pleaded guilty, but 6 of those have now tried to appeal because they feel they were forced to plead guilty. FYI – add those 6 to the 7 dismissed cases and that is 13 of 17, or 76%(!), who are claiming they were falsely accused. Only one of those six wanted to be named – the other 5 are afraid of reprisals from the College if they speak up.

That is intense. Something is deeply wrong with all of that.

The frustrating thing about all of this is that plenty of people have been warning that this is a likely, if not inevitable, outcome of Learning Analytics research studies that try to detect cheating from the data. Of course, this particular research focus is not a major aim of Learning Analytics in general, but several studies have been published over the years. I wanted to take a look at a few that represent the common themes.

The first study is a kind of pre-Learning Analytics paper from 2006 called “Detecting cheats in online student assessments using Data Mining.” Learning Analytics as a field is usually traced back to about 2011, but various aspects of it existed before that. You can even go back to the 1990s: Richard A. Schwier describes the concept of “tracking navigation in multimedia” in his chapter of Instructional Technology: Past, Present, and Future (2nd edition, 1995, edited by Gary J. Anglin, p. 124). Schwier really goes beyond tracking navigation into foreseeing what we now call Learning Analytics. So all of that to say: tracking students’ digital activity has a loooong history.

But I start with this paper because it contains some of the earliest ways of looking at modern data. The concerning thing with this study is that the overall goal is to predict which students are most likely to be cheating based on demographics and student perceptions. Yes – not only do they look at age, gender, and employment, but also a learner’s personality, social activities, and perceptions (did they think the professor was involved or indifferent? Did they find the test “fair” or not? etc.).

You can see in the chart on p. 207 that males with lower GPAs are mostly marked as cheating, while females with higher GPAs are mostly marked as not cheating. Since race is not considered in the analysis, there is no way to check whether systemic discrimination already baked into inputs like GPA turns this method into a tool for incredibly racist oppression.

Even more problematic is the “next five steps to data mining databases,” with one step recommending the collection of “responses of online assessments, surveys and historical information to detect cheats in online exams.” This includes the clarification that:

- “information from students must be collected from the historical data files and surveys” (hope you didn’t have a bad day in the past)

- “at the end of each exam the student will be is asked for feedback about exam, and also about the professor and examination conditions” (hope you have a wonderful attitude about the test and professor)

- “professor will fill respective online form” (hope the professor likes you and isn’t racist, sexist, transphobic, etc if any of that would hurt you).

Of course, one might say this is pre-Learning Analytics and the current field is only interested in predicting failure, retention, and other aspects like that. Not quite. Let’s look at the 2019 article “Detecting Academic Misconduct Using Learning Analytics.” The focus in this study is a bit more specific: they seek to use keystroke logging and clickstream data to tell whether a student is writing an authentic response or transcribing a pre-written one (which they assume can only come from contract cheating).

The literature review also shows that this study is not the only one digging into this idea; it goes back several years across multiple studies.

While this study does not get to the same Minority Report-level concerns that the last one did, there are still some problematic issues here. First of all is this:

“Keystroke logging allows analysis of the fluency and flow of writing, the length and frequency of pauses, and patterns of revision behaviour. Using these data, it is possible to draw conclusions about students’ underlying cognitive processes.”

I really need to carve out some time to write about how you can’t use clickstream data of any kind to detect cognitive processes in any way, shape or form. Most people that read this blog know why this is true, so I won’t take the time now. But the Learning Analytics literature is full of people that think they can detect cognitive activities, processes, or presence through clickstream data… and that is just not possible.

The paper does acknowledge the difficulties of using keystroke data to analyze writing, but proposes clickstream analysis as a much better alternative. I’m not really convinced by the arguments they present – but the gist is that they are looking to detect revision behaviors, because authentic writing involves pauses and deletions.

Except that is not really true for everyone. People who write a lot (like, say, by blogging) can reach a point where they write a lot without taking many pauses. Or, if they really know the material, they might not need to pause as much. On the other hand, the paper assumes that transcribing an existing document is a mostly smooth process. I know it is for some, but for me it is something that takes a while.

In other words, this study relies on averages and clusters of writing activities (words added/deleted, bursts of writing activity, etc.) to classify your writing as original or copied. That may work for the average writer, but what about students with disabilities that affect how they write? What about people who just work differently than the average? What about people from various cultures who approach writing differently, or those who have to translate what they want to write into English first and then write it down?

Not everyone fits so neatly into the clusters.
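To make that concern concrete, here is a minimal Python sketch of how a rule built on average writing behavior might label a session. It is not the paper's method, which works with clusters over more features; the event format, the feature names `pause_ratio` and `deletion_ratio`, the 2-second pause gap, and both thresholds are hypothetical choices for illustration only.

```python
from dataclasses import dataclass


@dataclass
class KeyEvent:
    t: float   # seconds since the writing session started
    kind: str  # "insert" or "delete" (hypothetical event format)


def features(events, pause_gap=2.0):
    """Return (pause_ratio, deletion_ratio) for a list of KeyEvents.

    pause_ratio: share of gaps between keystrokes that are >= pause_gap seconds.
    deletion_ratio: share of keystrokes that are deletions.
    """
    if len(events) < 2:
        return 0.0, 0.0
    gaps = [b.t - a.t for a, b in zip(events, events[1:])]
    pause_ratio = sum(g >= pause_gap for g in gaps) / len(gaps)
    deletion_ratio = sum(e.kind == "delete" for e in events) / len(events)
    return pause_ratio, deletion_ratio


def label(events, min_pauses=0.10, min_deletions=0.05):
    """Hypothetical rule: only writing that looks like the 'average' writer counts as original."""
    pause_ratio, deletion_ratio = features(events)
    if pause_ratio >= min_pauses and deletion_ratio >= min_deletions:
        return "original"
    return "copied"


# A fluent writer: steady keystrokes every 0.3 s and a single deletion.
fluent = [KeyEvent(t=i * 0.3, kind="delete" if i == 20 else "insert") for i in range(40)]
print(label(fluent))  # prints "copied"
```

Nothing in those keystrokes says the text was copied; this writer simply does not pause or revise like the average. Any fixed threshold or cluster boundary has the same problem for students who sit far from the mean.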

Of course, this study had a small sample size. Additionally, while they did collect demographic data and had students take self-regulated learning (SRL) surveys, they didn’t use any of that in the study. The SRL data would seem to be a significant aspect to analyze here. And they could at least have mentioned some details about the students who didn’t speak English as a primary language.

Now, of course, writing out essay exam answers is not common in all disciplines, and even when it is, many instructors will encourage learners to write out answers first and then copy them into the test. So these results may not concern many people. What about more common test types?

The last article I’ll look at is “Identifying and characterizing students suspected of academic dishonesty in SPOCs for credit through learning analytics” from 2020. There are plenty of other studies to look at, but this post is already getting long. SPOC here means “Small Private Online Course”… a.k.a. “a regular online course.” The basic gist is that they cluster students by how close their answers are to each other and how close their submission times are. If students get the exact same answers (including choosing the same wrong choice) and turn in their tests at about the same time, they are considered “suspect of academic dishonesty.” It should also be pointed out that the literature review here shows they are not the first or only people looking into this in the Learning Analytics realm.
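The flagging idea can be sketched in a few lines of Python. This is a simplified stand-in, not the authors' actual clustering: their similarity measure and “conservative thresholds” are not spelled out here, so `min_similarity`, `min_shared_wrong`, and `max_minutes` are hypothetical values, and the student ids and answer sheets are made up.

```python
from itertools import combinations


def answer_similarity(a, b, key):
    """Return (share of identical answers, number of identical *wrong* answers)."""
    same = sum(x == y for x, y in zip(a, b))
    shared_wrong = sum(x == y and x != k for x, y, k in zip(a, b, key))
    return same / len(key), shared_wrong


def flag_pairs(submissions, key, min_similarity=0.9, min_shared_wrong=2, max_minutes=5.0):
    """Flag every pair of students that meets all three (hypothetical) thresholds.

    submissions maps a student id to (answers, submission time in minutes).
    """
    flagged = []
    for (s1, (ans1, t1)), (s2, (ans2, t2)) in combinations(submissions.items(), 2):
        similarity, shared_wrong = answer_similarity(ans1, ans2, key)
        if (similarity >= min_similarity
                and shared_wrong >= min_shared_wrong
                and abs(t1 - t2) <= max_minutes):
            flagged.append((s1, s2))
    return flagged


key = list("ABCDABCDAB")
submissions = {
    "s1": (list("ABCDABCDCC"), 58.0),
    "s2": (list("ABCDABCDCC"), 59.5),
    "s3": (list("ABCDABCDCC"), 30.0),
}
print(flag_pairs(submissions, key))  # prints [('s1', 's2')]
```

All three students turned in identical answer sheets, including the same two wrong answers, yet only `s1` and `s2` are flagged. The timing window does a lot of the work, which is exactly where students with similar schedules can get caught.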

The researchers are basically looking for students who meet together and give each other answers to the test. Which, yes – it is suspicious if you see students turn in all the same answers at about the same time and get the same grade. Which is why most students who cheat this way make sure to change up a few answers and space out their submissions. I don’t know if the authors realized that they probably missed most cheaters and only caught the ones not trying that hard.

Or… let me propose something else here. All students are trying to get the right answers, so there are going to be similarities. When a lot of students get the same wrong answer on a question, that is often treated as a problem to fix on the teaching side (it could have been taught wrong). Plus, students can have similar schedules: working the same jobs, taking the same other classes that meet in the morning, etc. It is possible that out of the 15 or so they flagged as “suspect,” 1, 2, or even 3 just happened to get the same questions wrong and submit at about the same time as the others. They just had bad luck.

I’m not saying that happened to all of them, but look: you get a funnel effect with tests like these. All of your students are trying to reach the same correct answers and finish before the same deadline. So it’s quite possible there will be some purely coincidental overlap. Not for all, but isn’t it at least worth a critical examination if even a small number of students could get hurt just for turning in their test at the same time as others?
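The funnel effect can be explored with a small Monte Carlo sketch in Python. Every number in it is invented for illustration rather than taken from the study: the class size, how often students answer correctly, which three questions were (hypothetically) taught wrong, a 60-minute exam with submissions bunched near the deadline, and the 90%-match / 2-minute flagging rule. Every simulated student works alone, so any pair the rule flags is a false positive.

```python
import random
from itertools import combinations


def simulate_false_flags(n_students=60, n_questions=20, mistaught=(3, 7, 12),
                         p_correct=0.85, p_mistaught=0.7, seed=1):
    """Count pairs flagged by a 'same answers, same time' rule when nobody colludes.

    Answer 0 is correct on every question; answer 1 is the wrong answer that was
    (hypothetically) taught on the questions listed in `mistaught`.
    """
    rng = random.Random(seed)
    students = []
    for _ in range(n_students):
        answers = []
        for q in range(n_questions):
            if q in mistaught and rng.random() < p_mistaught:
                answers.append(1)                  # repeats what was taught
            elif rng.random() < p_correct:
                answers.append(0)                  # correct answer
            else:
                answers.append(rng.randint(1, 3))  # some other wrong choice
        # Submissions bunch up shortly before a 60-minute deadline.
        minute = max(0.0, 60.0 - rng.expovariate(1 / 5))
        students.append((answers, minute))

    flagged = 0
    for (a1, t1), (a2, t2) in combinations(students, 2):
        matches = sum(x == y for x, y in zip(a1, a2))
        shared_wrong = sum(x == y and x != 0 for x, y in zip(a1, a2))
        if matches / n_questions >= 0.9 and shared_wrong >= 2 and abs(t1 - t2) <= 2.0:
            flagged += 1
    return flagged


print(simulate_false_flags())
```

Because the simulated students are independent, any flags come only from shared teaching mistakes, similar ability, and a shared deadline. Trying a few different `seed` values, or raising `p_mistaught`, is a quick way to see how much coincidence alone can contribute.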

(This also makes a good case for ungrading, authentic assessment, etc.)

Of course, the “suspected” part gets dropped by the end of the paper: “We have applied this method in a for credit course taught in Selene Unicauca platform and found that 17% of the students have performed academic dishonest actions, based on current conservative thresholds.” How did they get from “suspected” to “have performed”? Did they talk to the students? Not really. They looked at five students and felt that there was no way their numbers could be anything but academic dishonesty. Then they talked to the instructor and found that three students had complained about low grades. The instructor looked at their tests, found they had the exact same wrong answers, and… case closed.

This is why I keep saying that Learning Analytics research projects should be required to have an instructional designer or learning research expert on the team. After reviewing course results for decades, I can say that it is actually common for students to get the same wrong answers and be upset about it because they were taught wrong. Instructors and instructional designers do make mistakes, so always find out what is going on. It’s also possible that weeks earlier, one student with the wrong information spread it to several others while discussing the class. It happens.

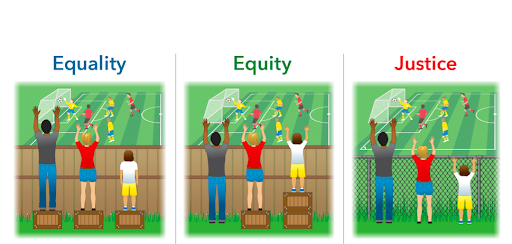


But this is what happens when you don’t investigate fully and assume the data is all you need. Throw in a side of assumptions about how cheaters act, and that goes a long way as well. So you can see a direct line from assumptions about the personality and demographics of cheaters, to using clickstream data to know what is going on in the brain, to assuming the data is all you need… all the way to the Dartmouth Medical School scandal, where the false accusation rate currently stands at 41% at minimum and possibly as high as 76%.

Matt is currently an Instructional Designer II at Orbis Education and a Part-Time Instructor at the University of Texas Rio Grande Valley. Previously he worked as a Learning Innovation Researcher with the UT Arlington LINK Research Lab. His work focuses on learning theory, heutagogy, and learner agency. Matt holds a Ph.D. in Learning Technologies from the University of North Texas, a Master of Education in Educational Technology from UT Brownsville, and a Bachelor of Science in Education from Baylor University. His research interests include instructional design, learning pathways, sociocultural theory, heutagogy, virtual reality, and open networked learning. He has a background in instructional design and teaching at both the secondary and university levels and has been an active blogger and conference presenter. He also enjoys networking and collaborative efforts involving faculty, students, administration, and anyone involved in the education process.