As the Great OPM Controversy continues to rage, a lot is being said about developing online courses “in-house” (by hiring people to do the work rather than paying a company to do so). This is actually an area that I have a lot of experience in at various levels, so I wanted to address the pros and cons of developing in-house capacity for offering online programs. I have been out of the direct instructional design business for a few years, so I will be a bit rusty here and there. Please feel free to comment if I miss anything or leave out something important. However, I still want to take a rough stab at a ballpark list of what needs consideration. First, I want to start with three given points:

- Everything I say here is assuming high-quality online courses, not just PowerPoints and Lecture Capture plopped online. But on the other hand, this is also assuming there won’t be any extra expenses like learning games or chat-bots or other expensive toys… errr… tools.

- In most OPM models, universities and colleges still have to supply the teachers, so that cost won’t be dealt with here, either. But make sure you are accounting for teacher pay (hopefully full time teachers more than adjuncts, and not just adding extra courses to faculty with already over-full loads).

- All of these issues I discuss are within the mindset of “scaling” the programs eventually to some degree or another, but I will get to the problems with scale later.

So the first thing to address is infrastructure, and I know there are a wide range of capacities here. Most universities and colleges have IT staff and support staff for things like email and campus computers. If you have that, you can hopefully build off of that. If you don’t…. well, the OPM model might be the better route for you as you are so far behind that you have to catch up with society, not just online learning. But I know most places are not in this boat. Some even already have technology and support in place for online courses – so you can just skip this part and talk directly with those people about their ability to support another program.

You also have to think about the support of technology, usually the LMS and possibly other software. If you have this in place, check to make sure the existing tools have capacity to take on more (they usually have some). If you have an IT department – talk with them about what it would take to add an LMS and any other tools (like data analysis tools) you would like to add. If you are talking one online program, you probably don’t need even one full time position to support what you need initially. That means you can make this a win/win for IT by helping them get that extra position for the ____ they have been wanting for a while if they can also share that position with online learning technology support part-time.

This is, of course, for a self-hosted LMS. All of the LMS providers out there will offer to host for you, and even provide support. It does cost, but shop around and realize there are vendors that will give you good service for a good price. But there are also some that won’t deal with you at all if you are not bringing a large number of courses online initially, so be careful there.

Then there is support for students and teachers. Again, this is something you can bundle from most LMS providers, or contract individually from various companies. If you already have student and faculty tech support of some kind on campus, talk with them to see what it would take to support __ number of new students in __ number of new online courses. They will have to increase staff, but since they often train and employ student workers to answer the calls/emails, this is also a win/win for your campus to get more money to more students. Assuming your campus fairly treats and pays its student workers, of course. If not, make sure to fix that ASAP. But keep in mind that this can be done for the cost of hiring a few more workers to handle increased capacity and then paying to train everyone in support to take online learning calls.

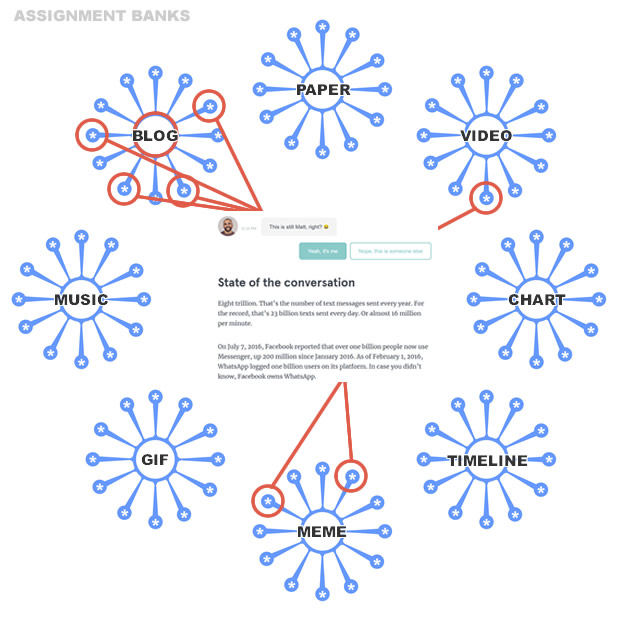

Then there will be the cost of the technology itself. Typically, this is the LMS cost plus other tools and plug-ins you might want to add in (data analytics, plagiarism detection, etc). Personally, I would say to avoid most of those bells and whistles at the beginning. Some of them – like plagiarism detection – are surveillance minded and send the wrong message to learners. Hire some quality instructional designers (I’ll get to that in a minute) and you won’t even need to use these tools. Others like data analytics might be of use down the line, but you might also find some of the things they do underwhelming for the price. With the LMS itself, note that there are other options like Domain of One’s Own that can replace the LMS with a wider range of options for different teachers and students (and they work with single sign on as well). There are also free open-source LMS if you want to self host. Then there are less expensive and more expensive LMS providers. Some that will allow you to have a small contract for a small program with the option to scale, others that want a huge commitment up front. Look around and remember: if it sounds like you are being asked to pay too much, you probably are.

So a lot of what I have discussed is going to vary in cost dramatically, depending on your needs and current capacity. However, if you remain focused on just what you need, and maybe sharing part of certain salaries with other departments to get part of those people’s time, and are also smart about scaling (more on that later), you are still looking at a cost that is in the tens of thousands range for what I have touched on so far. If you hit the $100k point, you are either a) over-paying for something, b) way behind the curve on some aspect, or c) deciding to go for some bells and whistles (which is fine if you need them or have people at your institution that want them – they usually cost extra with OPMs as well).

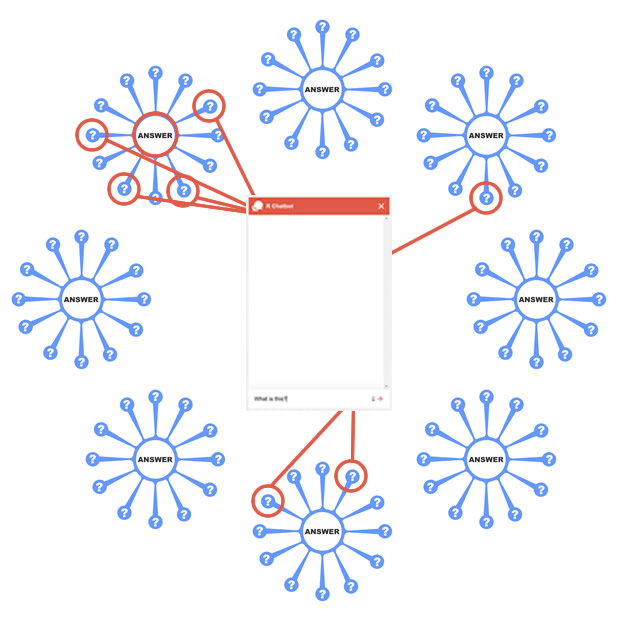

The next cost that almost anyone that wants to go online will need to pay for no matter what you do is course development. Many people think they can just get the instructors to do this – but just remember that the course will only be as good as their ability/experience in delivering online courses. You may find a few instructors that are great at it, but most at your school probably won’t fall into that category. I don’t say that as a bad thing in this context per se – most instructors don’t get trained in online course design, and even if they do, it is often specific to their field and not the general instructional design field. You will need people to make the course, which is where OPMs usually come in – but also in-house instructional designers as well.

With an average of 6-8 months lead time with a productive instructor, a quality instructional designer can complete 2-3 quality 15-week online courses per semester. I know this for a fact, because as an instructional designer I typically completed 9 or so courses per year. And some IDs would consider that “slow.” More intense courses that are less ready to transition to online could take longer. But you can also break out of the 15-week course mindset when going online as well – just food for thought. If you are starting up a 10 course online program, you would probably want three instructional designers, with varying specialties. Why three IDs if just one could handle all ten courses in two years easily? Because there is a lot more to consider.
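
If you want to play with those numbers yourself, here is a rough back-of-the-envelope sketch in Python. It only uses the figures above (about 9 courses per ID per year, a 10 course program). The function names are made up for illustration, and it assumes every course takes the same effort and that IDs do nothing but build courses, which is exactly the part that is not true in real life.

```python
import math

# Figures taken from the paragraph above; everything else is an assumption.
COURSES_PER_ID_PER_YEAR = 9   # roughly what one ID completes in a year
PROGRAM_COURSES = 10          # size of the example program


def years_to_build(courses, designers, rate=COURSES_PER_ID_PER_YEAR):
    """Whole years needed for `designers` IDs to build `courses` courses."""
    if courses < 0 or designers < 1 or rate < 1:
        raise ValueError("need courses >= 0, designers >= 1, rate >= 1")
    return math.ceil(courses / (designers * rate))


def spare_capacity(courses, designers, years, rate=COURSES_PER_ID_PER_YEAR):
    """Course-sized slots left over for revisions, training, one-offs, etc."""
    return designers * rate * years - courses


# One ID alone: the ten courses fit inside two years.
print(years_to_build(PROGRAM_COURSES, designers=1))

# Three IDs: done within the first year, with slack left over.
print(years_to_build(PROGRAM_COURSES, designers=3))
print(spare_capacity(PROGRAM_COURSES, designers=3, years=1))
```

The leftover capacity in the three ID case is the point: that slack is what pays for all the other work that comes with running online programs.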

Once you start one online program, other programs will most likely follow suit fairly quickly. It almost always happens that way. So go ahead and get a couple more programs in the pipeline to get going once the IDs are ready. But you also need to build up and maintain infrastructure once you get those classes going. How do you fix design problems in the course? When do you revise general non-emergency issues? What about when you change instructors? And who trains all of these instructors on their specific course design? What about random one-off courses that want to go online outside of a program? Who handles course quality and accreditation? And so on. Quality, experienced instructional designers can handle all of these and more, even while designing courses. Especially if you get one that is a learning engineer or that at least specializes in learning engineering, because these infrastructural questions are part of their specialty.

The salary and benefits range of an instructional designer is between 50K-100K a year depending on experience and the cost of living where you are located. These are also positions that can work remotely if you are open to that – but you will want at least one on campus so they can talk to your students for feedback on the courses they are designing. But remote work is something to keep in mind because you also have to consider the cost of finding an office and getting computers and equipment for each new person you want to hire (either as IDs or the other positions described). Also don’t forget about the cost of benefits like health care, which is pretty standard for full-time IDs.

Another aspect to keep in mind is accreditation – that will take time and people, but that will be the case even if you go with an OPM as well. You will need to pull in people from across the campus that have experience with this, of course – but you will also have to find people that can handle this aspect regardless of what model you choose. And it can be a doozy, just FYI.

Another aspect to consider is advertising. This is a factor that will always cost, unless you are focused solely on transitioning an existing on campus program into an online one (and not planning on adding the online option to the on-campus one). But even then, if you want it to scale – you will need to advertise. Universities aren’t always the best at this. If yours is, then skip ahead. If not, you will need to find someone that can advertise your new program. Typically, this is where OPMs tend to shine. But it is also getting harder and harder to find those that will just let you pay for advertising separate from the entire OPM package.

I can’t really say what you need to spend here – but I will say to be realistic. Cap your initial courses at manageable amounts – not just for your instructors, but also for your support staff. I can’t emphasize enough that it is better to start off small and then scale up rather than open the floodgates from the beginning. Every course that I have seen that opens up the first offerings to massive numbers of students from the beginning has also experienced massive learner trauma. Don’t let companies or colleges gloss over those as “bumps in the road.” Those were actual people that were actually hurt by being that bump that got rolled over. Hurt that could have been avoided if you started small and scaled up at a manageable pace.

So while we are here, let’s talk scale. Scale is messy, no matter how you do it. Even going from one on-campus course to two on-campus courses has traditionally led to problems. All colleges have wanted to increase enrollments as much as possible since the beginning of academia, so it’s not like OPMs were the first to talk about or try scale. However, we need to be real with ourselves about scale and the issues it can cause.

First of all, not all programs can scale. Nursing programs scale immensely because the demand for nurses is still massive. Also, nurses work their tails off, so Nursing instructors often personally take care of many problems of scale that some business models cause. I’m still not sure if the OPMs involved in those programs have even realized that is true yet. But not all programs can scale like a Nursing program can. Not all fields have the demand like Nursing does. Not all fields have the people with the mindset like Nurses have (no offense hopefully, but many of you know it’s true and it’s okay – I’m not sure if Nurses ever sleep).

All that to say – if you are not in Nursing, don’t expect to scale like Nursing can. It’s okay. Just be realistic about it. Also, be honest about any problems that are happening. Glossing over problems will only cause more problems in no time. Always have your foot on the brake, ready to stop the scaling before issues spiral out of hand.

Remember: education is a human endeavor, and people don’t react well to being herded like cattle. I feel like I have only touched the surface and left out so much, but I am as tired of typing as you probably are of reading. Hopefully this is giving some food for thought for the people that have been wondering about in-house program development.

So why go in-house development rather than OPM? Well, I have been making the case for the cost-saving benefits plus capacity-building benefits as well. Recently I read about an OPM that wanted to charge $600,000 to build one 10 course program. All that I have outlined here plus stuff I left out would easily cost half of that for a high-quality program. And I am one of those people that usually advocates for how expensive online courses can be to do right. But even I am thinking “Whoa!” at $600K.

Look, if you are wanting to build a program in a field like Nursing that can realistically scale, and you want to deal with thousands of students being pushed through a program (along with all the massive problems that will bring), then you are probably one of five schools in the nation that fit that description and OPMs are probably the best bet for you. For the other 3000-4000+ institutions in the nation, here are some other factors to consider:

- Hiring people usually means some or all of those people will live in your community, thus supporting local economies better.

- Local people means people on your campus that can interact with your students and get their input and perspective.

- Having your people do things also typically means more opportunities to hire students as GTAs, GRAs, assistants, etc – giving them real world skills and money for college.

- When your academics and your GRAs are part of something, they usually research it and publish on it. The impact on the global knowledge arena could be massive, especially if you publish with OER models.

- Despite what some say, HigherEd is constantly evolving. Not as fast as some would like, but it is happening. When the next shift happens, you will have the people on staff already to pivot to that change. If not, that will be another expensive contract to sign with the next OPM.

The last point I can’t emphasize enough. When the MOOC craze took off, my current campus turned to some of its experienced IDs – myself and my colleague Justin – to put a large number of MOOCs online. Now that analytics and AI are becoming more of a thing in education (again), they are turning to us and other IDs and people with Ed-Tech backgrounds on campus as well. For people that went the OPM route, these would all be more (usually expensive) contracts to buy. For our campus, it means turning to the people they are already paying. I don’t know what else to say to you if that doesn’t speak for itself.

Also, keep in mind that those who are not in academia don’t always understand the unique things that happen there. Recently I saw a group of people on Twitter upset about a college senior that couldn’t graduate because the one course they needed wasn’t offered that semester. The responses to this scenario are those that many in academia are used to hearing: “bet there is a simple software fix for this!” “what a money making scam!” “if only they cared to treat the student like a customer, they wouldn’t make this happen!” The implication is that the problem was on the University’s side for not caring about course scheduling enough to make graduation possible. Most people in academia are rolling their eyes at this – it is literally impossible for schools to get programs accredited if they don’t prove that they have created a pathway for learners to graduate on time. It makes good business sense that not all courses can be offered every semester, just like many businesses do not sell all products year round (especially restaurants). Plus, most teachers will tell you it is better to have 10 students in a course once a year than 2-3 students every semester – more interaction, more energy, etc. But schools literally have to map out a pathway for these variable offerings to work in order to just get the okay for the courses in the first place. Those of us in academia know this, but it seems that, based on what I saw on Twitter recently, many in the OPM space do not know this. We also know that there is always that handful of students that ignore the course offering schedules posted online, the advice of their advisers, and the warnings of their instructors because they think they can get the world to bend to their desires. I remember in the 90s telling two classmates they wouldn’t graduate on time if they weren’t in a certain class with me. They scoffed, but it turns out they in fact did not graduate on time.
So something to keep in mind – outside perspectives and criticism can be helpful, but they can also completely misunderstand where the problems actually lie.

And look, I get it – there will always be institutions that prefer to get a “program in a box” for one fee no matter how large it is. If that is you, then more power to you. There are a few things I would ask if you go the OPM route: first of all, please find a way to be honest and open about the pros and cons of working with your OPM. They may not like it, but a lot of the backlash that OPMs are currently facing comes from people just not buying the “everything is awesome” line so many are pushing. The education world needs to know your successes as well as your failures. Failure is not a bad thing if you grow from it. Second, please keep in mind that while the “in-house” option looks expensive and complicated, going the OPM route will also be expensive and complicated. They can’t choose your options for you, so all the meetings I discuss here will also happen within an OPM model, just with different people at the table. So don’t get an inflated ego thinking you are saving time or money going that route. Building a company is much different from building a degree program, so don’t buy into the logic that they are saving you start-up funds. They had to pay for a lot of things as a for-profit company that HigherEd institutions never have to pay for.

Finally, though, I will point out how you can also still sign contracts with various vendors for various parts of your process while still developing in-house, like many institutions have for decades. This is not always an all-or-nothing, either/or situation (see the response from Matthew Rascoff here for a good perspective on that, as well as Jonathan D. Becker’s response at the same link as a good case for in-house development). There are many companies in the OPM space that offer quality a la carte type services for a good price, like iDesign and Instructional Connections. Like I have said on Twitter, I would call those OPS (Online Program Support) more than OPM. It’s just that this term won’t catch on. I have also heard the term OPE for Online Program Enablers, which probably works better.